Release The Full Model! #16

|

Thanks for raising the issue! People have expressed similar sentiment internally and we take that argument seriously. Would love to see people start investigations with the small model and we will be re-evaluating our release of the larger models in the future. |

|

Actually, it seems more correct to leave this issue open :) |

|

plz release the models that support more languages |

|

Better safe than sorry. If the experts want caution, the least we can do is respect their judgement. |

|

https://blog.openai.com/better-language-models/:

|

|

Will you be releasing the English speaking unicorns to the public? |

|

We don't know enough about unicorns to say they aren't dangerous. We will release a unicorn fetus for the scientific community to study for now, and re-evaluate later. |

|

It's a pity. Let me remind you of the name: 'OpenAI'. Well, not so open, is it? |

|

@superjayman I agree. Openness and sharing are the core of open innovation, and releasing everything does no harm; it only makes improvement happen much more quickly. |

|

Thanks for exercising caution and pointing out that you did. Seems cool. Curious about focus. Can haz enlightenburger? |

|

Can you train this on a list of translated sentences (from English to Japanese, for example) and use it as an AI language translator? |

|

Isn't this exactly what OpenAI was supposed not to be about? Being closed source and subject to the whims of PR teams and the private incentives of a small number of people? Even for fake news, this would be a good tool. If you're going to believe something just because it's possible to say it in the English language, you're a fucking idiot. Check the source. Check peer reviews. |

|

I respect the decision: "with great power comes great responsibility". But I suggest releasing the 345M model. The reasons are twofold: it is much better than the 117M model but not nearly as good as the 1.5B model, and it has a similar number of parameters to the BERT-large-uncased model, which makes it a good candidate for comparison. |

|

Maybe the reason this repo exists is the same reason the team should release everything and be 'open', but they are the ones who make the decision anyway. I just hope it turns out well for both the team and us. |

|

There are a lot of things that could be misused in the wrong hands. But just imagine the positive impact of your technology. What you fear is inevitable anyway. |

|

https://news.ycombinator.com/item?id=19168712 Please read the horrendous comments. Well, don't read all of them, it gets depressing. But Jesus Christ. You are OPEN AI. Do we really have to spell that out for you? O P E N |

|

Help! I need this to help write my 9th-grade essays. |

|

Okay. A nonprofit builds an interesting product, which a for-profit could probably recreate and patent. Or am I wrong there? Why release only a teaser? A shrunken, non-trainable thing, just there to show off? I admit it: I am suitably impressed, but also seriously annoyed. "Open" in name only. |

|

It's worth remembering that OpenAI has in fact been pretty good about releasing the code for their work. They've been much more open than DeepMind, which I think was the concern that led to their creation. This seems comparable to responsible disclosure in software security: when an open-source group finds a bug in widely deployed, non-updatable software (e.g. something used in routers) that could be used for large-scale spamming, they'll start work on ways to mitigate it before announcing what the vulnerability is. If someone who works for a FOSS company were to find a really efficient design for building software to do denial-of-service attacks, it'd be a similar story: look for DoS mitigations first. I'd say this situation is comparable to the latter. OpenAI is worried that they've built a generally useful tool that could make a category of DoS attack much, much worse, and they don't currently see anything preventing that from happening.

I've been thinking that it might be good to get something like GPT2 1.5B into the hands of Google, Facebook, and a few other major forum operators, maybe Reddit, under a contract to use it for improving moderation. (Edit to clarify: just giving them early access so they can use it to build safeguards against things like it.) It seems like GPT2 is good enough to take a serious crack at implementing xkcd's suggestion from nearly ten years ago: who cares if it's a human or a machine? The real question is whether it's malicious content. That proposal as it stands wouldn't help much with fake news, because people lying is a different problem from people doing a denial-of-service via vitriol, but it would make a big impact on a major source of the problem. Or perhaps the AI teams with enough resources could get together and talk about how to use this level of NLP performance to build other types of linguistic DoS mitigations.

I am, for my own curiosity, quite irritated that it's not being released, but I agree that the performance reasonably justifies the concern. I just don't see withholding it as being that useful unless the time until someone replicates it is spent building mitigations for the world that will exist once someone else has a copy. @WuTheFWasThat I do think y'all could probably release the training code safely, though. It seems to me that the dataset and the roughly $40k worth of compute behind the trained model are the really interesting things here. |

|

I think you guys are scared of nothing; release the whole model, please. It's not like you have 20,000 people who have pulled this repo, so it's really hard to use this 'maliciously'. Besides, there are other alternatives that have produced similar (better) results: cakechat, for example, will give you some crazy things when fed the Reddit corpus (the same one that spooked you). But just like when you tell a young kid 'it's just a movie' or 'just a game', this is just a computer program. It's not some sci-fi novel come to life. |

|

We want the red pill! |

|

The resources needed to train the full model are beyond the average person and small companies which could use this for potentially very interesting non-malicious applications. However large organizations and state actors that are most likely to use this for malicious purposes can and typically do already have easy access to the resources needed to replicate the full model. Therefore by not releasing the full model you are ensuring that this sort of AI tech remains in the hands of powerful organizations and state actors that are most likely to misuse it while at the same time unintentionally tricking the general public to think this tech is not "really" available yet. Releasing the full model & leveling the playing field is the right thing to do here. Please release the full model. |

|

So How Many Other Innovations Are You Guys Going To Keep Closed? Say next week you have an even bigger breakthrough: will the full model then be superseded and seem less harmful, so you may decide to release it? See, it does not make sense. How do you put a limit on unknown capabilities? |

|

Everyone here could benefit from Nick Bostrom's |

|

I think you could at least open a challenge soon, something like Google's fake audio detection challenge, and then release the full model after the community has a detection baseline. |

|

Well... here is what I predict will happen very soon, and why. What your software can do will be replicated and released to the whole world within months, maybe even weeks. It will grow just like deepfakes, and college students will be using it to write their finals in the fall. The media has blasted the fact that you have a new toy and refuse to share. Now that people know what kind of coverage they can expect for a fully released version, they will not care about the consequences. They will get the publicity and the feedback they need to make it even better. From that point forward, all the phone apps, DIY personal assistant devices, and automated blog post generators will say "powered by [insert company]". Yes, that same company name will be associated with fake Amazon reviews, but when it comes to business and economics, bad publicity is still publicity. The upside is that instead of being "encouraged" to address these issues, governments and other agencies will be forced to. This train is coming, and I am afraid that putting pennies on the track is not going to stop it. Heck, my 15-year-old uses Python in ways that would never have occurred to me. Honestly, I personally couldn't pull this off without a team, but I am sure there are investors out there who see dollar signs in being first. I am sure you have gotten some very interesting e-mails reinforcing that sentiment. If I were in your position, I would reconsider my decision and release the full project, or at least set a date. People tend to be more productive when they are up against the clock. 90 days would certainly be enough time for these big companies to prepare and more than enough time for governments to educate their citizens about the swarm of "fake news" headed their way. I read that last sentence and spit my drink out, hahahaha. Anyway, read my post in your Monday morning meeting and reevaluate your decision. Great job, by the way. It must be awesome to see the results first hand. |

|

So basically, an adjacent dot in the image is a very close "next word" that could be used instead of other possible words? Like: I was eating a hamburger/nugget/apple/etc. 195 seems to be ĉ... |

|

Refer to encoder.json |

|

What about encoder.json? What is in that? |

|

It's the encoder/decoder for GPT-2: it translates words (whole or partial) into tokens and, after the output is generated, translates it back, I think. However, I don't know its full relation to vocab.bpe. |
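A minimal sketch of how token strings in encoder.json map back to text. GPT-2 works on raw bytes, and each byte is first swapped for a printable unicode character so tokens can be stored as JSON strings (token string to integer id). The table below is modeled on `bytes_to_unicode` in the repo's `src/encoder.py` (reproduced from memory, so treat it as a sketch); `decode_token_strings` and `decode_ids` are hypothetical helpers, and `decode_ids` assumes encoder.json sits in the working directory. Under this mapping, the `Ġ` and `Ċ` characters seen in encoder.json stand for a space and a newline, and `ĉ` stands for a tab byte.

```python
import json


def bytes_to_unicode():
    """Map each byte value 0-255 to a printable unicode character."""
    # Printable byte values keep their own character.
    bs = (list(range(ord("!"), ord("~") + 1))
          + list(range(ord("¡"), ord("¬") + 1))
          + list(range(ord("®"), ord("ÿ") + 1)))
    cs = bs[:]
    n = 0
    # Every other byte (space, newline, control codes, ...) gets the next
    # unused code point counting up from 256.
    for b in range(256):
        if b not in bs:
            bs.append(b)
            cs.append(256 + n)
            n += 1
    return dict(zip(bs, map(chr, cs)))


BYTE_ENCODER = bytes_to_unicode()                        # byte -> char
BYTE_DECODER = {c: b for b, c in BYTE_ENCODER.items()}   # char -> byte


def decode_token_strings(tokens):
    """Hypothetical helper: join token strings, map chars back to bytes, decode UTF-8."""
    raw = bytes(BYTE_DECODER[ch] for tok in tokens for ch in tok)
    return raw.decode("utf-8", errors="replace")


def decode_ids(ids, encoder_path="encoder.json"):
    """Hypothetical helper: id -> token string via encoder.json, then to text."""
    with open(encoder_path, encoding="utf-8") as f:
        encoder = json.load(f)                   # token string -> id
    id_to_token = {i: tok for tok, i in encoder.items()}
    return decode_token_strings(id_to_token[i] for i in ids)


if __name__ == "__main__":
    print(repr(decode_token_strings(["Hello", "Ġworld", "Ċ"])))  # 'Hello world\n'
    print(BYTE_DECODER["ĉ"])                                      # 9 (tab)
```

Mapping bytes rather than words is what lets the tokenizer represent any input, including emoji or other languages, without an "unknown" token.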

|

vocab.bpe lists word parts (BPE merges): BPE finds them first, and these are the encoding pieces.

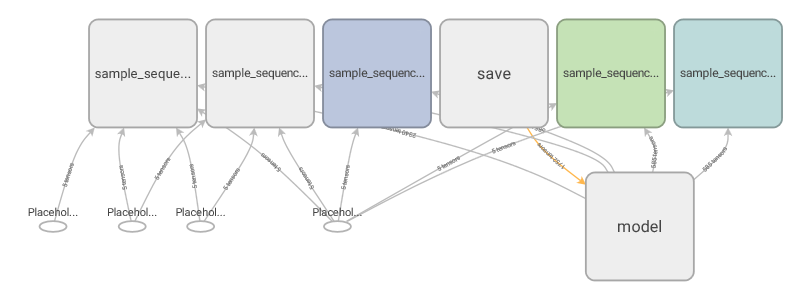

Both are used. Anyway, here: https://www.tensorflow.org/guide/graph_viz EDIT: OK, I put in the full path to the metadata graphs. Here is the main result: And I think this part consists of my 3 TOP_P ranges: So with that, it's possible to visualize what exactly happens in TensorFlow, at least. |
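On how encoder.json and vocab.bpe relate: vocab.bpe is an ordered list of merge rules (one pair of symbols per line, after a `#version` header line), and encoding a word means repeatedly merging the adjacent pair that was learned earliest; the resulting pieces are then looked up in encoder.json to get ids. The sketch below is a simplified version loosely modeled on the `bpe()` method in the repo's `src/encoder.py`. It skips the regex pre-splitting and byte-to-character mapping the real encoder applies first, and the three toy merges are made up for illustration.

```python
def load_merge_ranks(lines):
    """Parse vocab.bpe-style lines ("first second" per line) into {pair: rank}."""
    merges = [tuple(line.split()) for line in lines
              if line.strip() and not line.startswith("#version")]
    # Earlier lines were learned first and therefore get lower (higher-priority) ranks.
    return {pair: rank for rank, pair in enumerate(merges)}


def get_pairs(word):
    """All adjacent symbol pairs in a tuple of symbols."""
    return set(zip(word, word[1:]))


def bpe(token, ranks):
    """Split token into BPE pieces by repeatedly applying the best-ranked merge."""
    word = tuple(token)  # start from single characters
    while len(word) > 1:
        best = min(get_pairs(word), key=lambda p: ranks.get(p, float("inf")))
        if best not in ranks:
            break  # no learned merge applies any more
        first, second = best
        merged, i = [], 0
        while i < len(word):
            if i + 1 < len(word) and word[i] == first and word[i + 1] == second:
                merged.append(first + second)  # merge every occurrence, left to right
                i += 2
            else:
                merged.append(word[i])
                i += 1
        word = tuple(merged)
    return list(word)


if __name__ == "__main__":
    # Toy merges; the real vocab.bpe has about 50,000 merge lines.
    ranks = load_merge_ranks(["#version: 0.2", "l o", "lo w", "e r"])
    print(bpe("lower", ranks))  # ['low', 'er']
```

With these toy rules, "lower" becomes `l o w e r`, then `lo w e r`, then `low e r`, then `low er`, after which no rule applies.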

|

Has anyone tried asking the GPT-2 model what happens when such a potentially powerful resource is released to the public? |

|

I see a lot of people are interested. We need two things:

1. As far as I can see, there is enough here. What is the problem with releasing or mirroring the project? Funds? We can raise them. Knowledge? We have it. |

Nothing would happen; it is already available to those who have computing power (money). |

|

Everyone already has their own in their noggin, called GPT-3000, last I checked. And anyone can hire teams to write fake internet news. Perfect hit and no false moves. |

|

My point was that fake news isn't why OpenAI holds the algorithm back. We can fool humans way better, yet we are still here, aren't we! I can write trash, too. I actually know why they didn't release it, but am too kind to say it. |

|

First impressions of the 774M model: a 6GB GPU can generate a sample in the same amount of time; however, the number of samples per batch needs to be reduced from 5 to 2. So overall, the time required to generate a similar number of samples would be 2x (for 4 samples: 2 batches of 2) or 3x (for 6 samples: 3 batches of 2). The generated text seems to be of better quality. I haven't tested it enough yet, but I can already tell there is a difference. It still produces some nonsense, but to a lesser extent, and it seems more creative: I could pick out something sensible more often. What I'm curious about is whether it confuses people in dialog the way the previous one did, and how it handles completely weird situations, which remains to be tested (although I've already started, and it still mixes certain things up quite a bit). And yes, as in the paper, I can feel that reading comprehension is better; it sticks to the story better, I think? However, for now I have this weird feeling that it keeps having a person explain or repeat things over and over, though in a creative kind of way, unlike the previous medium model (to be tested further). |
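The 2x/3x figures in that comment come from counting batches once only 2 samples fit in memory at a time. A tiny sketch of that count, assuming every batch takes roughly the same wall-clock time (a simplification); `batches_needed` is a hypothetical helper name:

```python
import math


def batches_needed(n_samples: int, batch_size: int) -> int:
    """Number of generation passes when at most batch_size samples fit in GPU memory at once."""
    if batch_size < 1:
        raise ValueError("batch_size must be at least 1")
    return math.ceil(n_samples / batch_size)


if __name__ == "__main__":
    for n in (4, 6):
        # With 774M on a 6GB card the batch had to shrink to 2 samples.
        print(n, "samples ->", batches_needed(n, 2), "batches of 2")
```

A single batch of 5 covers 4 samples in one pass, which is where the 2x for 4 samples comes from.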

|

Now we're never going to get it as the project has been archived, which is a shame :( |

|

Where has it been stuffed away? Point me there. I can still comment and download the zip. |

|

@bladedsupernova the readme: So yeah, you'll be able to download the code but it's unlikely we'll ever see the full model, which is a shame. |

|

NVIDIA made an 8B-parameter model, 5 times morrrre powerful. I think GitHub has it available, maybe. It is the Megatron language model by NVIDIA. At least we can try the 774M. |

|

Let's put pressure on NVIDIA to release the trained model for experimentation. |

|

HAHAHAHAHAHAHAHAHAHAHAHAHAHAHAHA |

|

If you wanted to build malware or whatever using GPT-2, you certainly could: the half model creates human-readable text that 90% of the time is consistent enough to be believably written by the "average" person (considering the "average" person can't even get their vs. there right...). If you have the money, or are a state actor, you can just hire people to write troll posts on the sosh me. Consider that with a public cloud provider charging $2.5 per GPU hour, running the full model to generate text efficiently is going to get expensive (on a cheapo vCPU it takes 2.5 minutes of CPU time to make a single paragraph of text). I can't wait for the full model, but looking back on the press release for this project, I think the decision not to release the full thing was purely a marketing move. The full version will be cool, but it won't be 'groundbreaking'. But releasing a "half as powerful version" is a great marketing tool. I got hyped for the full release, but in all honesty I think it will feel like going from 90% comprehensive to 92.5% comprehensive. You're definitely going to see diminishing returns for TensorFlow stuff. This sets a bad precedent for an "open" project, though. OpenAI isn't "half-OpenAI". |

|

I have no problem with you not releasing your model. But I do have a problem with you calling yourself Open AI, because it is misleading. If this practice continues you should consider renaming yourself to Closed AI. |

|

Can we close this issue now? |

|

The full model has been released |

|

THANK GOD. YOU GUYS WILL FINALLY SHUT UP. lol |

|

Well, this was a bit anticlimactic. After we got our stuff in the trunk, we walked down to the parking lot, where we got a quick ride back to the airport. So, at the very least we had a ride to get home, but I don't really think this was the right method of getting home. |

|

The full model won't work with 6GB GPUs. Not enough memory, so don't buy one if you plan to use the 1558M model. The 774M model will work with 6GB, though. |

|

Thanks.

On Sat, Nov 9, 2019, 22:46 Krzysztof Bochniak wrote:

The full model won't work with 6GB GPUs. Not enough memory, so don't buy such if you plan to use 1558M model. 774M will work with 6GB though.


|

|

OOM error with an 8 GB GPU too. The 774M model works with 8 GB in some cases but gives OOM in others. |

|

@ElvisJames Interesting. I didn't get OOM with a 6GB GPU and the 774M model; however, I'm using a 2nd GPU that reports 5750MB free and running at most 2 samples at once (more results in OOM, yes). Are you sure you cannot squeeze the full model onto an 8GB GPU when it's not used by the system at all and you're running one sample? Or even limiting the output length to 128 tokens? |
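A back-of-the-envelope way to see why 1558M does not fit on a 6GB card while 774M does, and why fewer samples or shorter outputs help. This sketch assumes fp32 weights (4 bytes per parameter) and counts the cached attention keys and values, which grow with layers × embedding width × tokens × samples. The layer counts and widths are the published GPT-2 hyperparameters; activations, TensorFlow workspace and allocator overhead are deliberately ignored, so real usage is higher. The function names are hypothetical.

```python
# Rough GPU memory estimate for GPT-2 inference in fp32.
# Ignores activations, framework workspace and allocator overhead,
# so real usage will be noticeably higher than these numbers.

BYTES_PER_FLOAT32 = 4

# Published GPT-2 sizes: (parameter count, n_layer, n_embd)
GPT2_CONFIGS = {
    "774M": (774_000_000, 36, 1280),
    "1558M": (1_558_000_000, 48, 1600),
}


def weight_gb(model: str) -> float:
    """Memory for the fp32 weights alone, in decimal gigabytes."""
    params, _, _ = GPT2_CONFIGS[model]
    return params * BYTES_PER_FLOAT32 / 1e9


def kv_cache_gb(model: str, batch_size: int, n_tokens: int) -> float:
    """Memory for cached attention keys and values (one of each per layer per token)."""
    _, n_layer, n_embd = GPT2_CONFIGS[model]
    n_floats = 2 * n_layer * n_embd * n_tokens * batch_size
    return n_floats * BYTES_PER_FLOAT32 / 1e9


if __name__ == "__main__":
    for model in GPT2_CONFIGS:
        print(f"{model}: weights ~{weight_gb(model):.2f} GB, "
              f"cache for 2 samples x 1024 tokens ~{kv_cache_gb(model, 2, 1024):.2f} GB")
```

By this estimate, the 1558M weights alone come to about 6.2 GB, already more than the roughly 5750 MB free reported above, while the 774M weights take about 3.1 GB. The cache term scales linearly with both samples and tokens, so capping output at 128 tokens instead of 1024 cuts it by 8x.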

|

Full AGI release!: |

I understand your concerns, but I still think it's better to release the full model now and let people poke at its abilities and discover potential issues sooner.

The text was updated successfully, but these errors were encountered: